Sometimes, the most profound insights come from unexpected moments. While waiting for my Thanksgiving ham to cook, I found myself pondering a question that would lead to a revealing experiment about AI governance. As an IEEE CertifAIEd Lead Assessor, I’ve evaluated numerous AI implementations, but this holiday experiment in my kitchen would challenge some fundamental assumptions about AI capabilities.

From Kitchen Table to Enterprise Insights

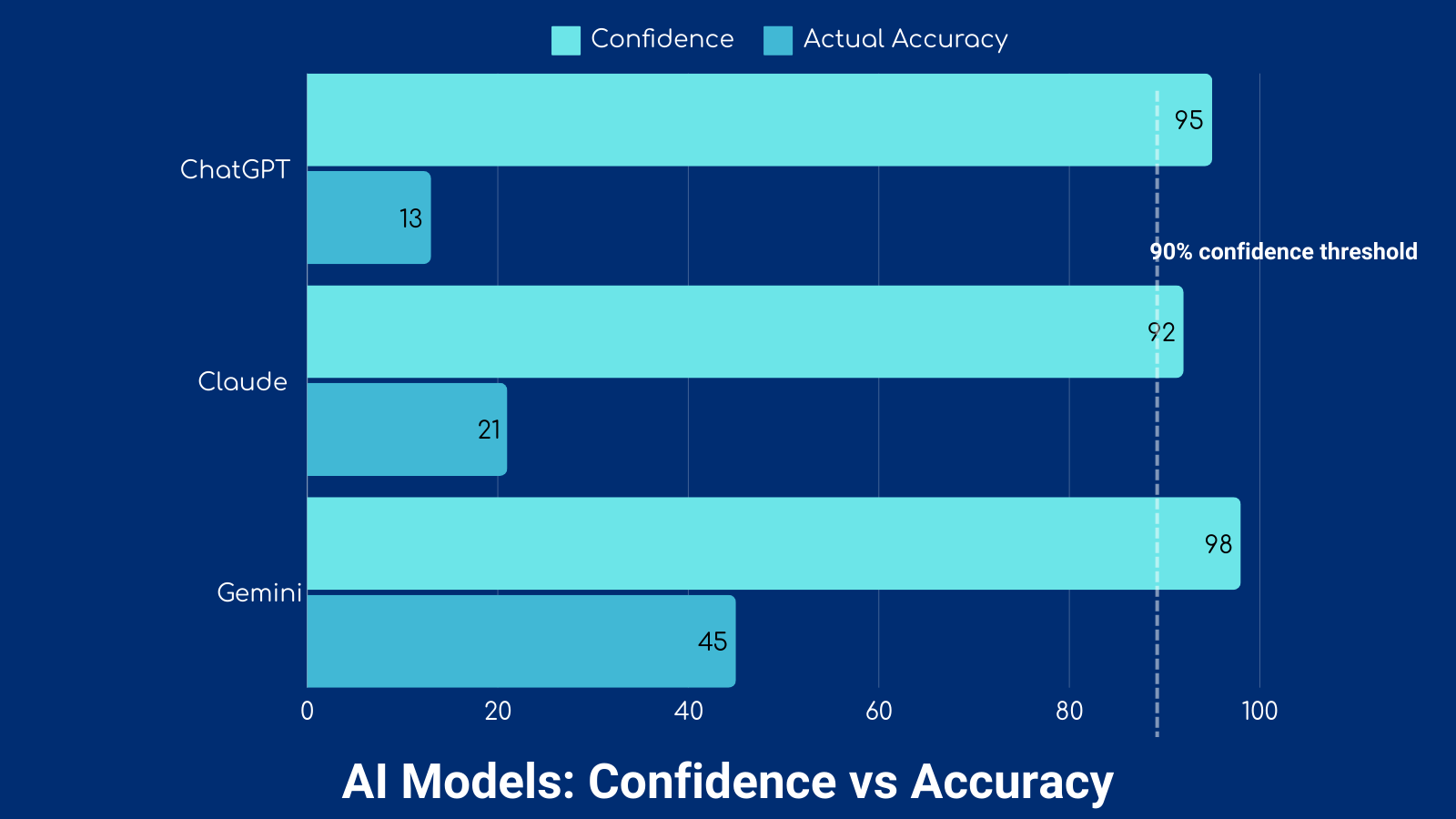

The Quartiles puzzle seemed simple enough – combine letter tiles to create words while following precise rules. Yet when I tested three leading AI models, the results were concerning, not only because of their low accuracy (ChatGPT at 13%, Claude at 21%, and Gemini at 46%) but also because of their unwavering confidence despite these failures.

This disconnect between confidence and competence mirrors what I’ve witnessed in enterprise settings. Throughout my career assessing AI implementations, I’ve seen this pattern repeatedly: systems displaying high confidence while missing critical operational rules.

Bridging Theory and Practice: A Framework Born from Experience

Drawing from both this experiment and years of enterprise assessment experience, I’ve developed a practical framework for ensuring reliable AI governance. This isn’t just theoretical – it’s battle-tested through real-world implementations and refined through countless hours of observation and analysis.

The Four Pillars of Effective AI Governance

- Assessment: Starting with Clear Eyes. My experience has taught me that a thorough initial assessment is crucial. Just as our Quartiles experiment provided a clear baseline, your organization needs to understand exactly where your AI systems stand regarding rule-following capabilities.

- Rule Implementation: Building Strong Foundations. Through years of certification work, I’ve learned that clear protocols aren’t just documentation – they’re your organization’s safety net. Define not only what success looks like but also what triggers human intervention.

- Validation: Trust but Verify. The Quartiles experiment showed how crucial regular testing is. Your validation process should be continuous and comprehensive, catching discrepancies before they become problems.

- Human Oversight: Maintaining the Human Element. Perhaps the most important lesson from my years in AI governance is that human oversight isn’t just a checkbox – it’s an essential component of responsible AI implementation.
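To make the Rule Implementation and Validation pillars more concrete, here is a minimal Python sketch of an automated rule check paired with a human-intervention trigger. It is not the procedure used in the Quartiles experiment. The puzzle rules encoded here (each word uses 1 to 4 tiles from the board, no tile is repeated within a word, and the word must appear in a reference dictionary), the helper names `check_answer` and `review_batch`, and the thresholds (90% minimum accuracy, 0.8 confidence for an "overconfident" error) are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical rule checker: the puzzle rules below are illustrative assumptions,
# not an official specification of the Quartiles game.


@dataclass
class Verdict:
    word: str
    valid: bool
    reason: str
    confidence: float  # the model's self-reported confidence, 0.0 to 1.0


def check_answer(tiles, confidence, board, dictionary, max_tiles=4):
    """Check one proposed word (a list of tile strings) against the assumed rules."""
    word = "".join(tiles).lower()
    if not 1 <= len(tiles) <= max_tiles:
        return Verdict(word, False, f"must use 1-{max_tiles} tiles", confidence)
    if len(set(tiles)) != len(tiles):
        return Verdict(word, False, "tile reused within one word", confidence)
    missing = [t for t in tiles if t not in board]
    if missing:
        return Verdict(word, False, f"tiles not on board: {missing}", confidence)
    if word not in dictionary:
        return Verdict(word, False, "not in reference dictionary", confidence)
    return Verdict(word, True, "ok", confidence)


def review_batch(answers, board, dictionary, min_accuracy=0.9, overconfident=0.8):
    """Score (tiles, confidence) answers and decide whether a human must review them.

    Escalates when accuracy falls below min_accuracy OR when any wrong answer
    was reported with confidence >= overconfident (both thresholds are assumptions).
    """
    verdicts = [check_answer(tiles, conf, board, dictionary) for tiles, conf in answers]
    accuracy = sum(v.valid for v in verdicts) / len(verdicts) if verdicts else 0.0
    confident_errors = [v for v in verdicts if not v.valid and v.confidence >= overconfident]
    escalate = accuracy < min_accuracy or bool(confident_errors)
    return accuracy, confident_errors, escalate


# Usage with a tiny made-up board and dictionary.
board = {"ca", "t", "dog", "s", "ap", "ple"}
dictionary = {"cat", "cats", "dogs", "apple"}
answers = [(["ca", "t"], 0.90), (["dog", "s"], 0.95), (["ap", "ple", "s"], 0.99)]

accuracy, confident_errors, escalate = review_batch(answers, board, dictionary)
print(f"accuracy={accuracy:.0%}, escalate={escalate}")  # prints: accuracy=67%, escalate=True
for v in confident_errors:
    print(f"confident error: {v.word!r} ({v.reason}, confidence {v.confidence})")
```

The design choice worth noting is the second trigger: a batch can clear an average accuracy bar and still be routed to a human if any wrong answer was stated with high confidence, which is exactly the gap between confidence and competence described above.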

Looking Forward: A Practical Implementation Path

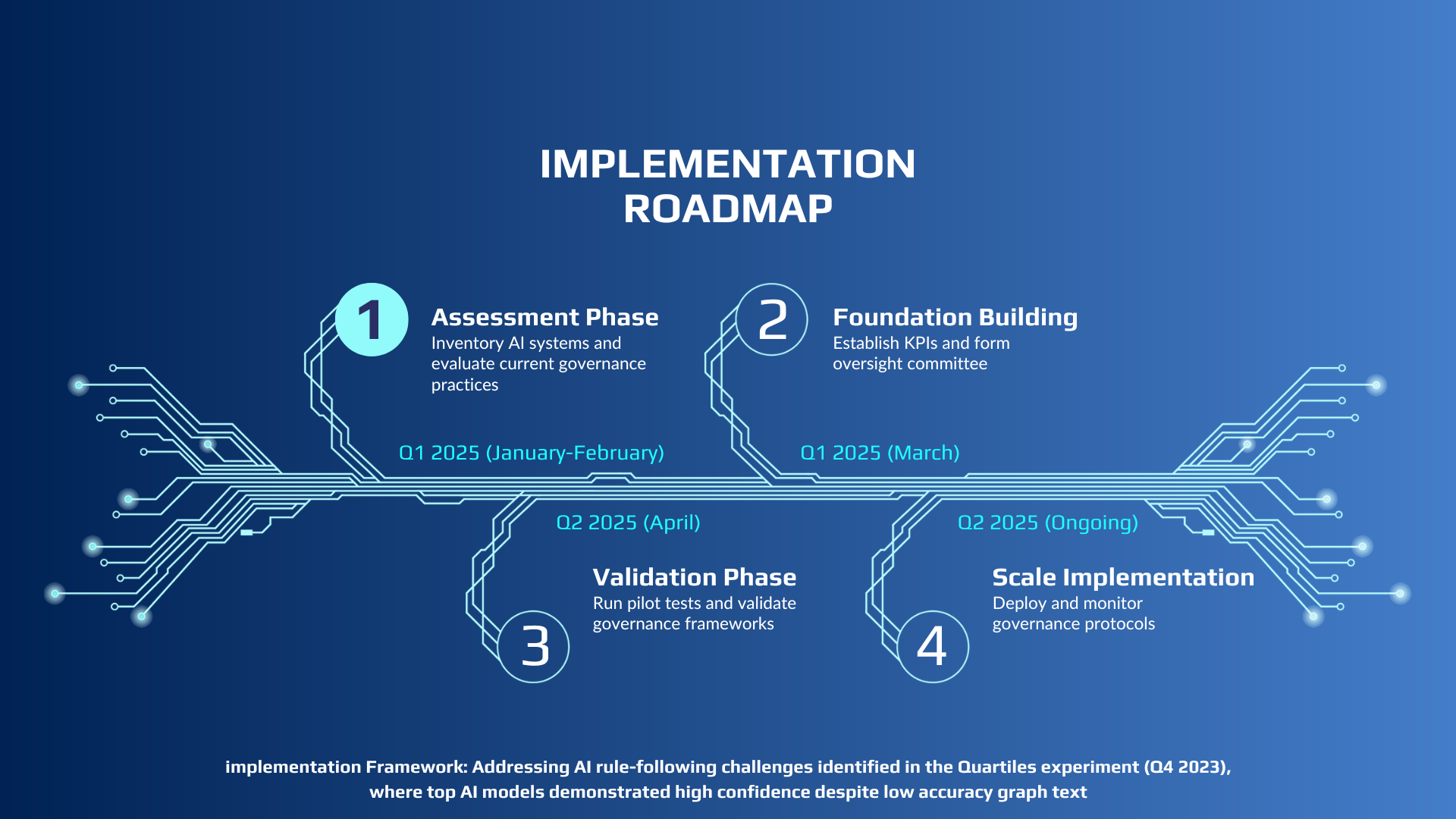

Having guided numerous organizations through AI governance implementation, I’ve developed a timeline that balances ambition with practicality. Here’s how I recommend approaching the next 6 months:

Q1 2025 (January-February): Assessment Phase

Start where we started with the Quartiles experiment – establish your baseline understanding. What are your AI systems’ current capabilities and limitations?

Q1 2025 (March): Foundation Building

This is where theory meets practice. Establish your governance framework based on real-world requirements and capabilities.

Q2 2025 (April): Validation Phase

Test your framework the same way we tested the models in the Quartiles experiment, but at a scale suited to your enterprise needs.

Q2 2025 (Ongoing): Scale Implementation

Roll out your governance framework thoughtfully and systematically, maintaining flexibility for adaptation.

Personal Reflections and Professional Insights

Through this journey from a holiday experiment to enterprise implementation strategies, I’ve learned that effective AI governance isn’t just about technical frameworks – it’s about understanding the intersection of human oversight and artificial intelligence.

Take Action: Moving from Insight to Implementation

- Start with Understanding: Download our AI Governance Assessment Tool – it’s built from real testing experience and practical implementation insights.

- Build on Experience: Schedule a consultation to discuss your specific governance needs. Let’s translate these insights into action for your organization.

- Stay Informed: Subscribe for Part 3 of our series, where we’ll explore future-proofing your AI governance strategy.

A Personal Note on Moving Forward

As we continue this journey together, remember that effective AI governance isn’t about perfect solutions but about thoughtful implementation and continuous learning. The Quartiles experiment taught me that sometimes the simplest tests reveal the most profound insights.

Stay tuned for Part 3, where we’ll explore how to build resilient, future-proof governance frameworks based on these foundational insights.

About the Author: As an IEEE CertifAIEd Lead Assessor and founder of Ethical Tech Matters, I’ve dedicated my career to helping organizations implement effective AI governance frameworks. Connect with me on LinkedIn or visit www.ethicaltechmatters.com to learn more about our approach to ethical AI implementation.