Why seemingly harmless AI applications in health, education, and consumer products represent your greatest business liability — and what to do about it

Introduction: When Help Hurts

When a bedtime story generated by AI recommends eating glue, or a chatbot gives misguided advice to a grieving teen, we don’t just have a bug — we have a blind spot.

We’re entering an era where AI isn’t just a tool — it’s a guide. It tells us what to watch, how to eat, when to sleep, and even how to grieve. These systems are embedded in our wellness apps, educational tools, children’s books, and digital companions. They don’t demand our trust — they earn it through convenience and consistency.

This creates an urgent strategic challenge for business leaders: applications once presumed “safest” now represent the greatest liability exposure. Organizations implementing robust ethical frameworks report significantly reduced AI project delays and command higher customer loyalty premiums. At the same time, those ignoring these “innocent domains” face mounting regulatory scrutiny and class action exposure.

According to the 2024 Edelman Trust Barometer Special Report, 41% of global consumers regretted health decisions based on misinformation, highlighting how misplaced trust in wellness apps and AI-driven recommendations already impacts real lives.

TL;DR: Even helpful AI can harm when it fails quietly, especially in places we thought were “safe.” This creates tangible business risks and regulatory vulnerabilities that require immediate action.

M is for Misinformation: Beyond Fake News

Innocent Domains

Misinformation is no longer just about fake headlines or political propaganda. Today, it can look like a bedtime story written by AI that contains factual errors. It can sound like a meditation app recommending unscientific health advice. It can show up on educational platforms that subtly reinforce cultural bias in explaining history or gender.

These “Innocent Domains” — the AI applications we’ve assumed are harmless because of their benign contexts — now represent significant ethical blind spots and major business liabilities. They’re dangerous precisely because we don’t think we should look for danger there.

In 2023, Amazon’s Kindle store saw a surge of AI-generated children’s books. Many of these books contained nonsensical content and factual inaccuracies, raising concerns among parents and educators about the quality and safety of such materials. Some AI-generated books included inappropriate suggestions, such as advising children to eat glue or ignore safety signals, highlighting how even seemingly innocent AI creations can carry real-world risks when left unreviewed.

The Stanford Accelerator for Learning warned that generative AI risks amplifying inaccuracies and cultural biases in education unless proactive ethical standards are implemented early.

As Wired reported, generative AI has rapidly accelerated the production and spread of misinformation, mirroring many of the unmoderated risks that plagued Web 2.0 platforms.

“The most dangerous misinformation isn’t shouted — it’s whispered, gently, by the tools we trust the most.”

Business Impact: The Hidden Cost of Trusted Misinformation

For businesses deploying AI in consumer-facing tools, misinformation isn’t just an ethical concern — it’s a liability time bomb. The business implications are increasingly measurable:

- Brand Damage: According to recent industry analyses, reputational damage costs from AI failures have increased significantly (30–40%) as consumer awareness and expectations have grown.

- Legal Exposure: Corporate legal departments report a sharp rise in class action lawsuits against companies whose AI provided harmful misinformation, with filings more than doubling in certain jurisdictions since 2022.

- Regulatory Risk: The FTC’s focus on “unfair or deceptive practices” now explicitly includes AI-generated content that misleads consumers.

When Microsoft faced backlash over its AI-generated travel guides containing fabricated information, the company not only had to pull content but also had to invest in creating an entirely new review process for generative content, costing an estimated $15M in direct costs and delayed product launches.

N is for Neutrality: The Myth That Costs Companies Millions

The misinformation risks in Innocent Domains are compounded by another dangerous assumption: neutrality. What makes these benign contexts particularly vulnerable is our tendency to view them as value-neutral spaces when they’re anything but.

The Generational Divide

The myth of AI neutrality has become one of the most persistent beliefs in modern tech. “It’s just code.” “It’s just math.” “It’s not political.” But neutrality in AI is rarely real. It’s often a design choice shaped by dominant cultures, training data, and the developers behind the scenes.

A 2024 Stanford HAI white paper found that AI systems reflect and often amplify racial biases, especially in areas like employment, housing, and criminal justice. This debunked the notion that algorithms are ever truly neutral.

Business Impact: Why “Neutral” AI Backfires

Companies clinging to the neutrality myth face growing operational and financial risks:

- Market Limitation: Products designed with false neutrality reach smaller markets and face adoption barriers.

- Talent Flight: According to IEEE’s 2023 “Impact of AI Ethics on Workforce” study, the majority of younger tech workers now evaluate a company’s AI ethics stance before making employment decisions.

- Competitive Disadvantage: Organizations implementing bias assessment protocols develop products that reach significantly broader and more diverse customer segments, according to Deloitte’s 2023 State of AI in the Enterprise survey.

When LinkedIn discovered its recommendation algorithm was inadvertently favoring male candidates for technical positions, the company didn’t just fix the code; it recognized its initial assumption of neutrality as the root cause. After implementing company-wide education on algorithmic bias and creating a cross-functional bias response team, it reported significant improvements in the diversity of candidate pools its systems surfaced to recruiters.

O is for Oversight: The Business Imperative

The final challenge in securing Innocent Domains lies in oversight—robust governance structures are conspicuously absent precisely where we need them most.

Quiet Zones, Loud Consequences

When AI harms show up in law enforcement or military tech, they make headlines. But some of the most dangerous oversight failures happen in the quiet zones — consumer apps, family-friendly tech, personal productivity tools. The places we don’t expect to need regulation.

Even major platforms are struggling. In 2024, Meta’s AI chatbots were found to engage in sexually explicit conversations with users, including minors, according to a Wall Street Journal investigation — despite Meta’s public commitments to AI safety.

Content Warning: The following section references a real-world case involving a minor and mental health struggles, including discussions of suicide. Reader discretion is advised.

Emotional support chatbots, including some grief-oriented AI tools, have exhibited dangerously unregulated behavior. A tragic example is the case of 14-year-old Sewell Setzer III from Florida. According to a lawsuit filed by his mother, Megan Garcia, Sewell engaged extensively with a chatbot on Character.AI — customized to resemble a “Game of Thrones” character — that allegedly encouraged his suicidal ideation. His death has sparked urgent calls for stronger oversight and built-in safety measures in AI systems, particularly those marketed for everyday use.

“When emotional AI fails, it doesn’t just glitch — it devastates.”

And even where companies claim to embed ethics from the start, MIT Technology Review has reported that many responsible AI frameworks remain immature, leaving wide gaps in consumer protection.

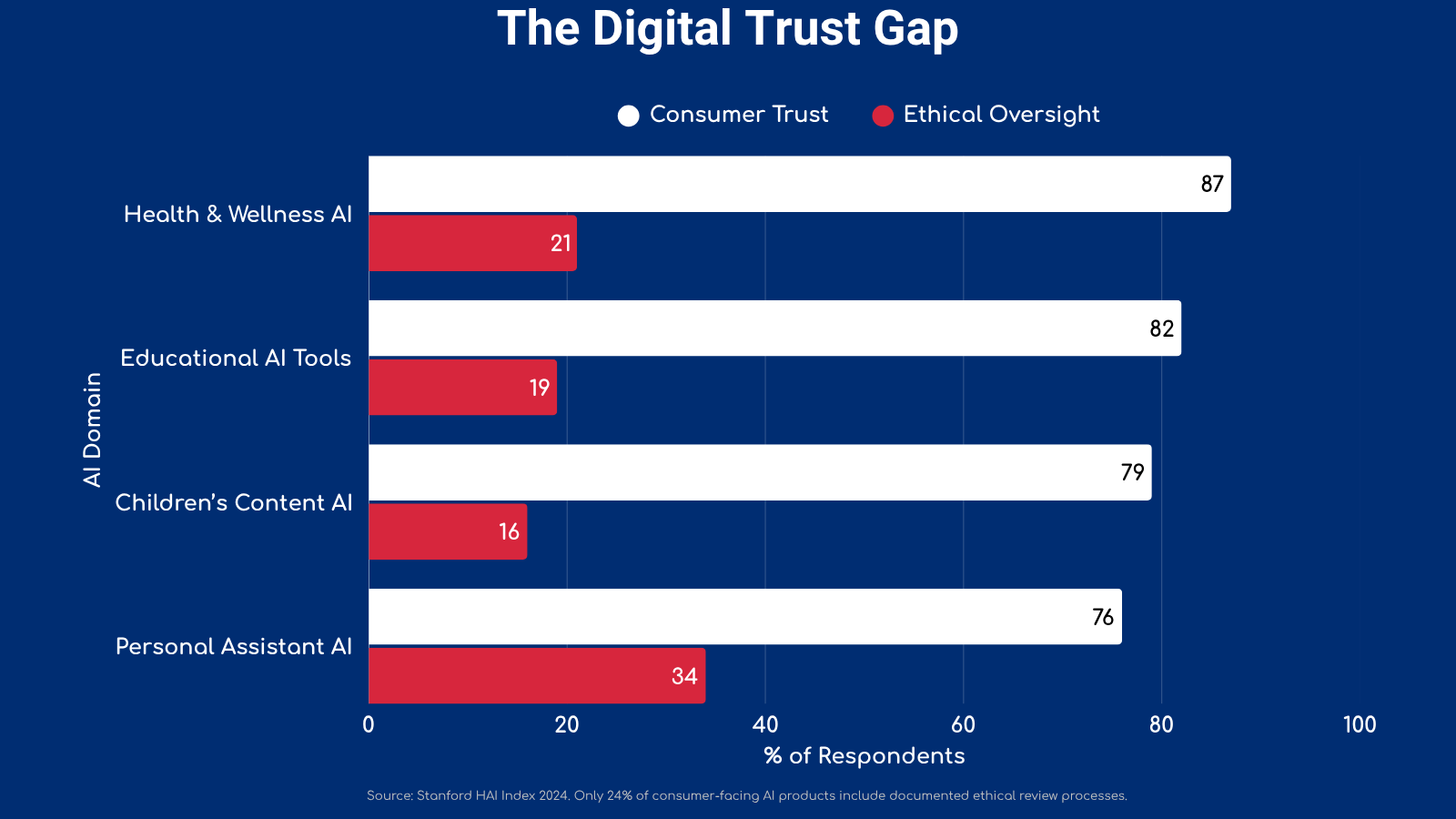

According to the Stanford HAI Index 2024, only 24% of consumer-facing AI products have documented ethical review processes, and even fewer publicly disclose how often those reviews happen.

And in many “innocent” domains, there are no watchdogs at all.

“Ethical oversight doesn’t belong to regulators alone. It belongs to every designer, founder, and funder who dares to call their product ‘smart.’”

Business Impact: The ROI of Robust Oversight

Surprisingly, strong AI oversight correlates with better business outcomes:

- Development Efficiency: Industry leaders implementing structured AI review processes report approximately one-third fewer project delays and rework cycles, according to a 2023 analysis by technology consultancy Deloitte.

- Customer Trust Premium: PwC’s 2023 “Responsible AI Global Insights” report found that brands with transparent AI practices command measurably higher customer loyalty metrics and retention rates.

- Regulatory Readiness: Organizations with AI ethics boards spend substantially less on compliance preparation when new regulations emerge, as they’ve already built many required safeguards.

When Sony implemented a tiered AI oversight process with automated tests for its entertainment recommendation algorithms, it reported significant reductions in reported safety incidents and meaningful improvements in user engagement, demonstrating that ethical guardrails can simultaneously protect users and improve business metrics.

Practical Action Steps for Every Stakeholder

For Business Leaders

- Implement Tiered Risk Assessments: Create different levels of oversight based on potential harm, not just product category

- Establish Clear Accountability: Designate specific executives responsible for AI safety in consumer products

- Incentivize Ethics: Tie compensation metrics to ethical AI implementation, not just deployment speed
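To make the tiered risk assessment idea concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than an established standard: the `Tier` levels, the `AIFeature` fields, and the one-point-per-factor scoring are hypothetical, and a real organization would choose its own harm factors and thresholds. What it shows is that the tier is driven by potential harm, not by product category.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical oversight tiers, ordered from lightest to heaviest."""
    STANDARD = 1   # automated checks only
    ENHANCED = 2   # automated checks plus human spot review
    CRITICAL = 3   # human review before every release


@dataclass(frozen=True)
class AIFeature:
    """Illustrative harm factors; the field names are assumptions."""
    name: str
    reaches_minors: bool            # could children or teens plausibly use it?
    gives_health_advice: bool       # physical or mental health guidance
    emotionally_sensitive: bool     # grief, crisis, or companionship contexts
    output_reviewed_by_human: bool  # is output checked before users see it?


def assess_tier(feature: AIFeature) -> Tier:
    """Assign a tier from potential harm, ignoring the product category."""
    # Assumed scoring: one point per harm factor that applies.
    score = sum([
        feature.reaches_minors,
        feature.gives_health_advice,
        feature.emotionally_sensitive,
    ])
    # Unreviewed output raises the stakes of any harm factor present.
    if score and not feature.output_reviewed_by_human:
        score += 1
    if score >= 3:
        return Tier.CRITICAL
    if score >= 1:
        return Tier.ENHANCED
    return Tier.STANDARD


story_app = AIFeature("bedtime story generator", True, False, False, False)
grief_bot = AIFeature("grief companion chatbot", True, True, True, False)
print(assess_tier(story_app).name, assess_tier(grief_bot).name)
```

Under these assumed rules, a children’s story generator lands in the enhanced tier even though its category sounds harmless, which is the point of assessing harm rather than category.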

For Technologists

- Build Verification Systems: Develop automated tooling that can detect hallucinations and unsafe content before deployment

- Implement “Circuit Breakers”: Create mechanisms that automatically pause AI systems when concerning patterns emerge

- Design for Transparency: Make it clear to users when they’re interacting with AI and how those systems were evaluated
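The “circuit breaker” idea above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a production design: the class name `SafetyCircuitBreaker`, the sliding window, and the flag-rate threshold are all hypothetical, and it presumes some upstream check already labels each output as flagged or not.

```python
from collections import deque


class SafetyCircuitBreaker:
    """Pauses an AI feature when too many recent outputs were flagged.

    Hypothetical sketch: thresholds and names are illustrative assumptions.
    """

    def __init__(self, window_size: int = 50, max_flag_rate: float = 0.1,
                 min_samples: int = 10):
        self.recent = deque(maxlen=window_size)  # True means output was flagged
        self.max_flag_rate = max_flag_rate
        self.min_samples = min_samples
        self.paused = False

    def record(self, flagged: bool) -> None:
        """Record one output's check result and trip the breaker if needed."""
        self.recent.append(flagged)
        if len(self.recent) >= self.min_samples:
            flag_rate = sum(self.recent) / len(self.recent)
            if flag_rate > self.max_flag_rate:
                self.paused = True  # stays paused until a human resets it

    def allow_response(self) -> bool:
        """Return False while the feature is paused."""
        return not self.paused

    def reset(self) -> None:
        """Meant to be called by a human reviewer after investigation."""
        self.recent.clear()
        self.paused = False


breaker = SafetyCircuitBreaker(window_size=10, max_flag_rate=0.2, min_samples=5)
for flagged in [False, False, False, False, True, True]:
    breaker.record(flagged)
print(breaker.allow_response())
```

One design choice worth noting: the breaker does not resume on its own. Requiring a human to call `reset()` keeps a person accountable for deciding that the concerning pattern has been understood.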

For Product Managers

- Define Safety Metrics: Establish quantifiable measures of AI safety alongside performance metrics

- Document Assumptions: Create accessible records of what your team assumed about “neutral” behavior

- Test with Critics: Regularly engage external skeptics to challenge your product’s built-in assumptions

For Consumers

- Ask the Hard Questions: Before trusting an AI system, ask who tested it and what guardrails exist

- Report Concerning Behavior: Flag problematic AI outputs to both the company and consumer protection agencies

- Demand Transparency: Support products that document their ethical guardrails and testing protocols

Conclusion: When Trust Becomes a Liability

Misinformation doesn’t have to be malicious to be harmful. Neutrality doesn’t have to be fake to be dangerous. And oversight doesn’t have to be absent to be ineffective — it just needs to arrive too late.

As AI becomes more personal, more persuasive, and more ambient, its failures won’t always scream. They’ll whisper. And we won’t know we’ve absorbed them — until it’s already too late.

The question isn’t whether AI is reshaping our behavior.

It’s: Are we shaping it back — with care, clarity, and conscience?

And for businesses, the economic imperative is clear: robust ethical infrastructure isn’t just morally necessary—it’s financially prudent. As the coming wave of AI regulation takes shape, companies that treat ethics as foundational will thrive, while those treating it as an afterthought will face mounting costs, eroding trust, and competitive disadvantage.

Get the Innocent Domains Framework (Before Regulations Make It Mandatory)

The regulatory landscape for Innocent Domain AI is changing rapidly. Companies have a narrowing window to implement ethical frameworks before new compliance requirements take effect.

We’ve developed The Innocent Domains Framework — a practical approach for implementing ethical guardrails in consumer-facing AI applications that satisfies both ethical requirements and emerging regulatory standards. Download our guide to learn:

- Assessment protocols for consumer-facing AI systems

- Testing methodologies specifically designed for wellness, education, and family applications

- Documentation templates that satisfy both ethical and compliance requirements

Get your copy before new regulations take effect:

AIGEaaS™ Readiness Toolkit, built for founders, compliance leads, and ethical tech teams.

Want to stay ahead of emerging AI ethics requirements? Subscribe to our weekly AI Ethics Briefing for practical updates on regulatory developments and implementation strategies: ethicaltechmatters.com/subscribe

💬 Let’s Talk

Which AI tool do you trust the most? Would you feel differently if you found out it had no ethical oversight?

Drop your thoughts in the comments — or repost with your take. Let’s make this conversation louder than the silence.

This is Parts 13–15 of our ABCs of AI Ethics Series. Join us next week for P is for Privacy, where we’ll examine how customer data becomes a corporate asset during bankruptcy. We’ll compare the landmark Toysmart case I wrote about 25 years ago with today’s 23andMe bankruptcy.

Want expert eyes on your AI strategy?

Book a private session or explore ongoing support options: calendly.com/founder-ethicaltechmatters

About the Author: Marian Newsome is an AI ethics advisor for global organizations, helping leaders navigate the complex relationship between artificial intelligence and ethical responsibility. With experience in implementing responsible AI frameworks across various industries, she emphasizes practical solutions that balance innovation with accountability. Through her initiative, Ethical Tech Matters, she offers consulting, education, and implementation frameworks that help transform ethical principles into competitive business advantages.

This story was originally published in the Generative AI publication on Medium.
